A world where cutting-edge machine learning models and robotics work together seamlessly is no longer a distant vision. Recent advances in machine learning have transformed how machines perceive and interact with their surroundings.

Our open-source platform empowers developers to configure, control, and deploy machine learning models on machines. It also lets developers bring in other open-source technologies to extend what their devices can do. With Hugging Face, a go-to hub for machine learning enthusiasts, developers can use publicly available open-source models and datasets in their computer vision and machine learning projects.

Viam has opened possibilities for using these models on machines in the real world through the custom vision service integration on our registry. Whether you’re prototyping the next big thing in robotics or just looking to automate a home improvement, this integration provides you with the tools to build faster and smarter machines.

Seeing intelligently: Machine learning meets robotics

For robots to truly interact with their environment, they need to see and understand the world around them. That’s where Viam’s vision service comes in, supporting advanced features like real-time object detection, classification, and 3D segmentation.

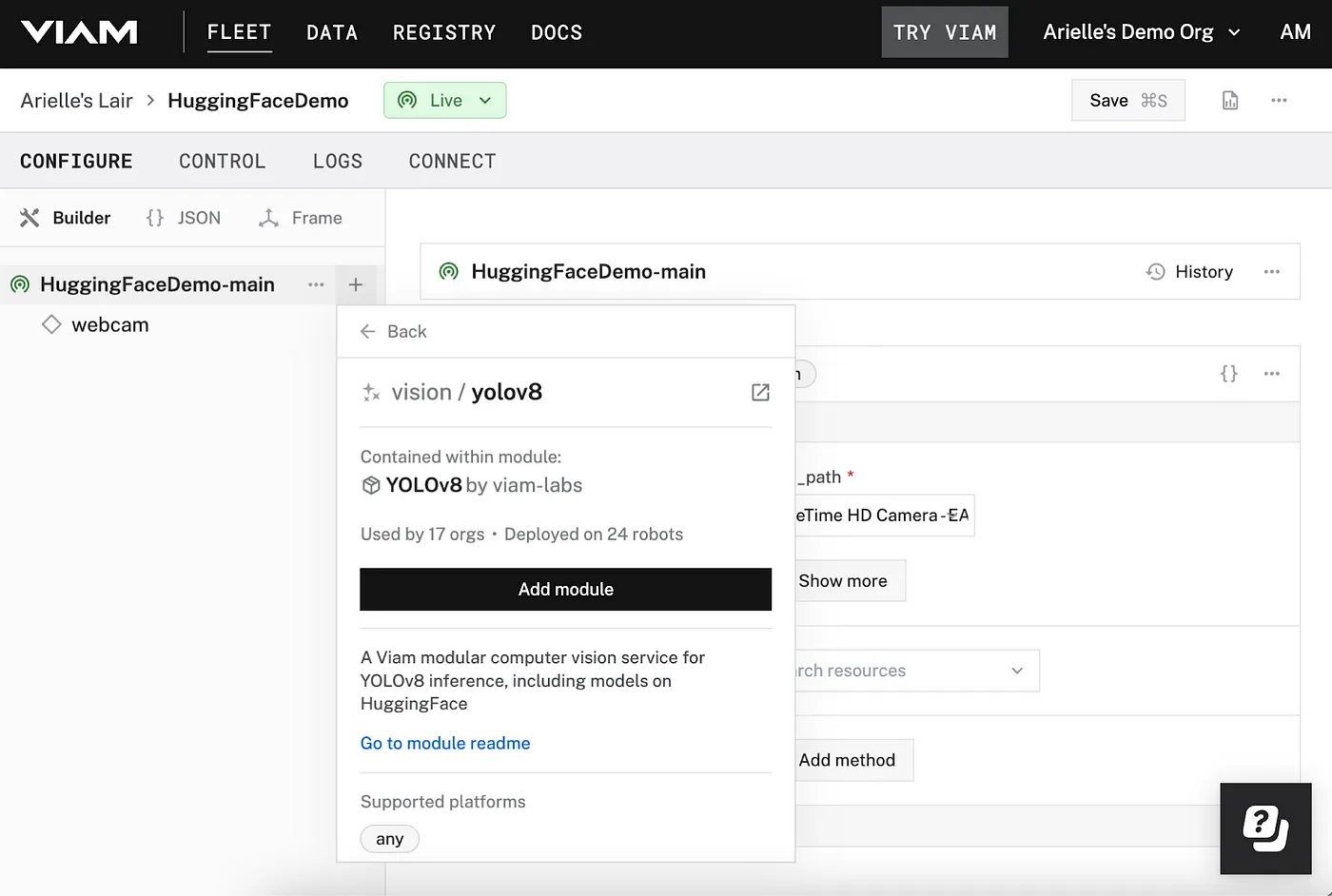

Now, imagine enhancing these capabilities by seamlessly integrating Hugging Face models into your robotics projects. With custom vision service modules like YOLOv5 and YOLOv8 available in the registry, you can do just that—no upfront coding required.

YOLOv5 is the go-to choice for its ease of use, while YOLOv8 is perfect for those wanting precision and speed. Whether you’re looking for quick deployment or top-tier performance in dynamic environments, community-contributed modules on Viam’s registry have you covered.

To learn more about these models, head to our YOLO model guide.

Getting started: Deploying Hugging Face models on Viam

Setting up a Hugging Face model on a Viam machine is straightforward. Start by creating a machine instance on the Viam app. No fancy hardware is needed: a computer and a webcam are enough.

Follow the setup guide in the app to install viam-server and connect your machine. After that, configuration is simple: configure your webcam, add the YOLOv5 or YOLOv8 vision service, and specify the model’s location, either a Hugging Face repository or a local path. To see classifications or detections in real time in the app, set up a transform camera component. Once that works, you can write scripts using our vision API. You can follow a step-by-step tutorial on Hugging Face’s blog.
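As a rough illustration of the scripting step, here is a minimal sketch using the Viam Python SDK's vision client. The machine address, API key values, and the component names `webcam` and `yolo-vision` are placeholders we made up for this example, not values from the tutorial; use whatever names you chose during configuration. The small `keep_confident` helper is also our own addition, included only to show one way to filter results.

```python
import asyncio

# All of these values are placeholders; replace them with your own.
MACHINE_ADDRESS = "<your-machine-address>"
API_KEY = "<your-api-key>"
API_KEY_ID = "<your-api-key-id>"
CAMERA_NAME = "webcam"       # assumed name of the configured camera
VISION_NAME = "yolo-vision"  # assumed name of the configured vision service


def keep_confident(pairs, min_confidence=0.5):
    """Keep (label, confidence) pairs at or above the threshold, best first."""
    kept = [p for p in pairs if p[1] >= min_confidence]
    return sorted(kept, key=lambda p: p[1], reverse=True)


async def main():
    # Imported here so the helper above can be used without the SDK installed.
    from viam.robot.client import RobotClient
    from viam.services.vision import VisionClient

    opts = RobotClient.Options.with_api_key(api_key=API_KEY, api_key_id=API_KEY_ID)
    machine = await RobotClient.at_address(MACHINE_ADDRESS, opts)
    try:
        vision = VisionClient.from_robot(machine, VISION_NAME)
        # Ask the vision service to grab a frame from the camera and run the model.
        detections = await vision.get_detections_from_camera(CAMERA_NAME)
        pairs = [(d.class_name, d.confidence) for d in detections]
        for label, confidence in keep_confident(pairs):
            print(f"{label}: {confidence:.0%}")
    finally:
        await machine.close()

# To run against a live machine: asyncio.run(main())
```

The 0.5 threshold is an arbitrary starting point; a sensible value depends on the model you pick and how noisy your camera feed is.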

From there, you can pick any compatible model available on Hugging Face. Want to classify your sneaker collection so you can see at a glance which brands you favor and declutter your closet? Choose a shoe classification model from Hugging Face, like the YOLOv8 Shoe Classification model.

Or what if you want to build a safety system for construction sites to ensure all workers are wearing hard hats? Choose a hard hat detection model and design a safety system that notifies you when workers are not wearing protective gear on a job site. You can also keep track of how many machines are in a warehouse or on a construction site using this construction safety model.
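The notification logic for a hard hat system like this can stay small. The sketch below is one possible approach, not a prescribed design: it assumes each detection exposes a class name and a confidence score, and it uses a hypothetical label, `NO-Hardhat`, for a bare head. Label names differ between models, so check the label list of the model you choose. The `Detection` class here is a stand-in for whatever your vision service returns.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Stand-in for a detection result: a label plus a confidence score."""
    class_name: str
    confidence: float


# Hypothetical label for a worker without a hard hat; check your model's labels.
NO_HARDHAT_LABEL = "NO-Hardhat"


def violations(detections, min_confidence=0.6):
    """Return the detections that confidently show a missing hard hat."""
    return [
        d for d in detections
        if d.class_name == NO_HARDHAT_LABEL and d.confidence >= min_confidence
    ]


def needs_alert(detections, min_confidence=0.6):
    """True if at least one confident missing-hard-hat detection is present."""
    return len(violations(detections, min_confidence)) > 0


frame = [Detection("Hardhat", 0.93), Detection("NO-Hardhat", 0.81)]
if needs_alert(frame):
    print("Alert: worker without a hard hat detected")
```

In practice you would probably require the condition to hold across several consecutive frames before sending a notification, so that a single misdetection does not trigger an alert.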

What’s next?

The integration of YOLO models into the Viam platform allows for rapid prototyping and deployment across a range of applications, from security systems to warehouse and home automation. After testing models directly in the Viam app, you can further customize your applications using Viam’s APIs and flexible SDKs.

Explore the Viam Registry and start contributing to this growing open-source ecosystem. Whether you’re working on home improvement or developing the next breakthrough in robotics, now’s the perfect time to dive in and start building with Viam.
