Create a custom AI assistant with local data security and no usage charges in fewer than 50 lines of code.

I've never bought a house plant. And yet, somehow my house and porch have slowly filled with all varieties of flora. Even with my aversion to seeking them out (thank you, all our plant-gifting friends!), I do need to keep the leafy beings alive and healthy. To help myself and anyone else who may be plant-sitting, I built an AI-powered greenery consultant that can identify the various species and provide helpful tips for taking care of the growing population within my home.

This smart machine uses speech-to-text to listen for a question, recognizes the plant type using a pre-trained machine learning model and computer vision, and passes that context into a local large language model (LLM) before sharing the response out loud using text-to-speech. This provides a natural and intuitive way of interacting with your new assistant while keeping the data and processing in your control.

The plant identification model

This project might sound like a lot to put together from scratch, but Viam's built-in and community modules make it possible to compose these capabilities, adding or removing features as needed while staying independent of specific hardware components, software packages, or ML model types. For example, when I approached this project, I knew I needed a machine learning model to recognize plant types, but I was not sure which one.

- I knew that Viam makes it easy to train and create your own custom ML models, but I did not feel comfortable training a model on plants I knew nothing about.

- Next, I looked in the Viam Registry, since a newly released feature lets people share and use ML models directly from the Registry. I found a model that my colleague Arielle had created at a plant store while working with Nvidia on a webinar highlighting Viam’s integration with Nvidia’s Triton server, but because it was built for demo purposes, it was trained on only 5 plant types.

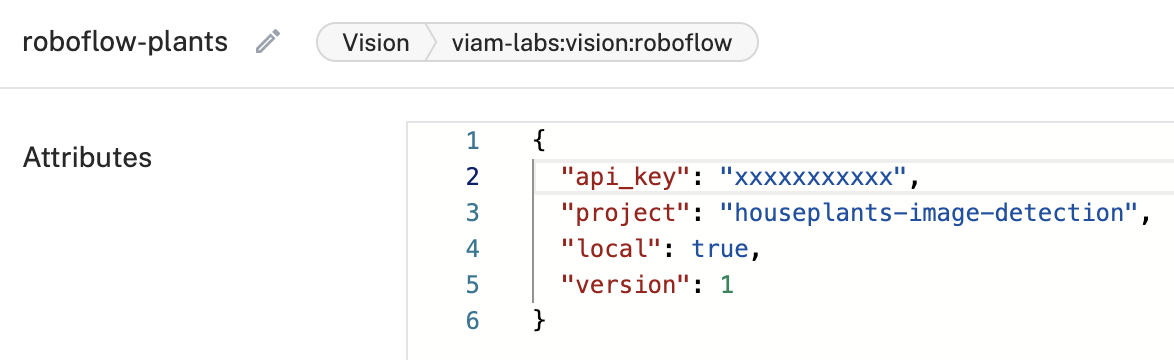

- I searched around the internet and found a houseplant model trained on over 6,000 images. Could it work with Viam? Yes, because the Viam Registry has modules that let you point to and use Roboflow and HuggingFace models.

To use any of these models, first choose the kind of model and add a couple of details.

Then, whether it be a model you trained yourself, a model you find on the Viam Registry, or a HuggingFace, Roboflow or DirectAI model, you can “see” the world with the same single line of code:
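As a minimal sketch, assuming a Viam vision service client (for example, one obtained with `VisionClient.from_robot(robot, "plant-vision")`, where `plant-vision` is a hypothetical service name), the classification call can be wrapped in a small helper that returns the most likely plant label. The `min_confidence` threshold and `timeout_s` are hypothetical parameters added for illustration, not part of the SDK.

```python
import asyncio
from typing import Any, Optional


async def identify_plant(
    vision: Any,
    camera_name: str,
    min_confidence: float = 0.5,  # hypothetical threshold, tune for your model
    timeout_s: float = 10.0,      # hypothetical guard so a stalled call can't hang the assistant
) -> Optional[str]:
    """Return the best plant label seen by the camera, or None if unsure.

    Assumes `vision` is a Viam vision service client whose
    get_classifications_from_camera(camera_name, count) returns
    classifications with `class_name` and `confidence` fields.
    """
    # Request only the single highest-ranked classification
    classifications = await asyncio.wait_for(
        vision.get_classifications_from_camera(camera_name, 1), timeout=timeout_s
    )
    if not classifications:
        return None
    best = classifications[0]
    # Ignore low-confidence guesses rather than passing them to the LLM
    if best.confidence < min_confidence:
        return None
    return best.class_name
```

Because the helper only relies on the vision service interface, it works the same whether the underlying model was trained in Viam, pulled from the Registry, or served through a HuggingFace or Roboflow module.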

Large language models (LLMs)

LLMs like ChatGPT have gained a lot of well-deserved attention in the past year and have been integrated into services across most industries. However, only now are we seeing LLMs that are both small enough and useful enough to run locally on portable devices. This gets interesting for a few reasons:

- Cloud-hosted LLMs can get costly, since providers charge for API access.

- For some use cases, you want to maintain privacy and not have to worry about your interactions with an LLM going to the cloud, where they could potentially even be used to add to the LLM's knowledge base (imagine a custom LLM used for corporate document analysis, for example).

- A local LLM can maintain its own knowledge base and does not rely on internet connectivity.

A Viam module lets you deploy a local LLM to your machine in just a few clicks. This module currently supports TinyLlama 1.1B (with support for additional models planned), which provides reasonably accurate and performant results on small, portable single-board computers like the Raspberry Pi 5 and Orange Pi 5B.

For my use case, the LLM can cover a wide range of topics, such as watering frequency, lighting conditions, temperature preferences, and fertilization schedules. Its ability to understand context and generate personalized responses makes it an ideal tool for guiding users in their plant care journey. The model and prompt can be tuned to be more specific or broad, depending on the use case.
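To make "tuning the prompt" concrete, here is a minimal sketch of how a recognized plant type could be folded into the text sent to the LLM. The template wording, `DEFAULT_TEMPLATE`, and `build_prompt` are hypothetical choices for illustration; narrowing or broadening the template is how you would make the assistant more specific or more general.

```python
from typing import Optional

# Hypothetical prompt template; {plant_context} is empty when no plant was recognized
DEFAULT_TEMPLATE = (
    "You are a friendly houseplant care assistant. "
    "{plant_context}"
    "Answer briefly and practically: {question}"
)


def build_prompt(question: str, plant: Optional[str] = None,
                 template: str = DEFAULT_TEMPLATE) -> str:
    """Combine the user's question with the (optional) recognized plant type."""
    plant_context = f"The plant in front of the camera is a {plant}. " if plant else ""
    return template.format(plant_context=plant_context, question=question.strip())
```

For example, `build_prompt("How often should I water it?", "ficus")` tells the model which plant "it" refers to, which a small model like TinyLlama cannot infer on its own.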

Get the local LLM running

Viam lets you stay mostly hardware-agnostic, so you could try all of this on a MacBook, but because I wanted to create a small portable device, I used the following:

Materials:

- A single-board computer (an Orange Pi 5B or another board with an octa-core CPU for best performance; a Raspberry Pi 5 also works)

- MicroSD card

- USB webcam with microphone

- Portable speaker (I used a USB speaker)

- Power supply for the board

The first step in this project build is to install viam-server on the single-board computer (SBC) by following the Viam installation instructions. Then, in the Viam app, add a new machine instance. I named mine “smart-assistant”, but you can choose any name you like.

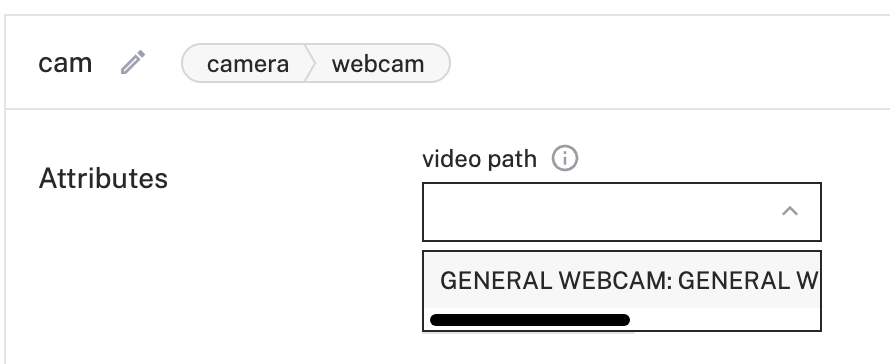

We will now set up the components and services, beginning with a camera. Viam supports a variety of cameras; we chose a webcam, which can generally be set up in just a few clicks because Viam auto-detects attached cameras.

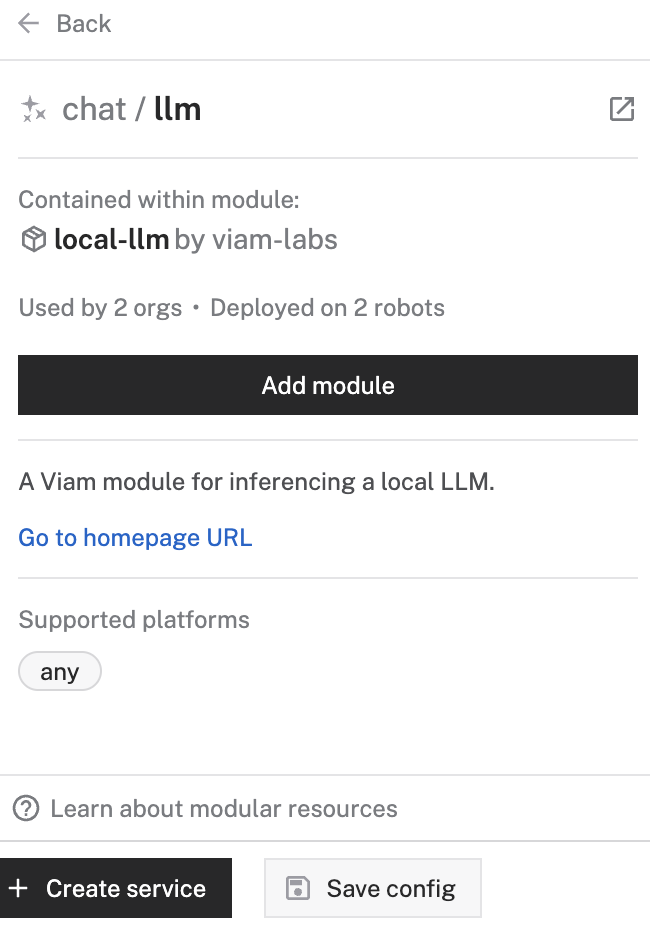

Now, we can start adding capabilities to our machine. First, we’ll add the local LLM, which we can find in the Service configuration menu, under ‘chat’.

Configuration attributes are documented in the local LLM module README, but we can start with the defaults.

After configuring the LLM module, we can test it by running a small Python script using the Viam Python SDK. If you haven’t installed the Viam Python SDK yet, install it, then install the chat API used by the local LLM module by running this command in your terminal:

Then, create this script on your machine, replacing api_key, api_key_id, and the robot address with the correct values from the Viam app’s Code sample tab.

Running this script should produce a result like:

“A robot is an autonomous machine designed to perform specific tasks with limited human control. They are programmed to follow predetermined instructions or rules, making them highly efficient and reliable. Robots are used in various industries, from manufacturing and assembly to healthcare and transportation.”

You now have an LLM running and generating responses entirely on your local machine!
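The structure of such a test script can be sketched in two pieces: connecting with the Viam Python SDK, and sending one prompt to the LLM. This is a minimal sketch under stated assumptions: `connect` uses the SDK's `RobotClient.Options.with_api_key` and `RobotClient.at_address`, while `ask_llm` assumes the chat service client exposes an async `chat(prompt)` method returning a string (check the local LLM module's README for the exact client class). The `timeout_s` parameter is a hypothetical addition, since generation on a small board can take a while.

```python
import asyncio
from typing import Any


async def connect(address: str, api_key: str, api_key_id: str) -> Any:
    """Connect to the machine using the values from the Viam app's Code sample tab."""
    # Imported lazily so ask_llm below can be used without the SDK installed
    from viam.robot.client import RobotClient

    opts = RobotClient.Options.with_api_key(api_key=api_key, api_key_id=api_key_id)
    return await RobotClient.at_address(address, opts)


async def ask_llm(chat: Any, question: str, timeout_s: float = 120.0) -> str:
    """Send a single prompt to the local LLM and return its trimmed reply.

    Assumes `chat` is the local LLM module's chat service client with an
    async chat(prompt) -> str method.
    """
    reply = await asyncio.wait_for(chat.chat(question), timeout=timeout_s)
    return reply.strip()
```

In a full script you would `await connect(...)`, obtain the chat client as described in the module README, print `await ask_llm(chat, "What is a robot?")`, and finish with `await robot.close()`.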

Add a speech interface

We’ll want to interact with our local LLM in a natural way, so the next thing we’ll add to the project is a module that gives our machine the ability to:

- Listen to us speak and convert that speech to text that we can pass to the LLM

- Convert the LLM response to audio so we can hear the response spoken aloud

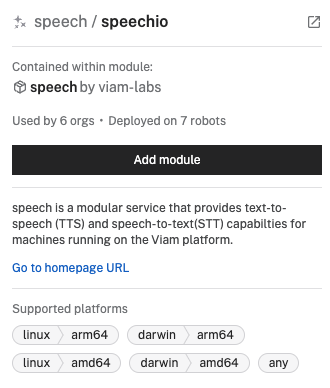

We’ll use a speech module from the Viam registry with these capabilities. To configure it, we’ll go back to the Services configuration tab in the Viam app and choose the speechio module.

There are options to customize the speech module’s behavior. Since we want to be able to talk to our plant assistant, we need to allow the module to listen to the microphone and to set a trigger prefix (the phrase that triggers a response; you can set it to whatever you prefer). Add this configuration to the attributes field of the speech service, and save.

Once you’ve configured the module, install the speech API in the environment where you run your Python scripts by running this command in the terminal:

Now update your script to import the speech API, initialize the speech service, listen, and have the speech service speak the LLM response out loud.

We will have the script running in a loop. When the speech module hears a command, it will add it to a buffer. We’ll pull that command out of the buffer and send it to the LLM.

You now have an AI assistant that you can converse with: run this script and say something prefixed with the trigger you chose, like:

“Hey there, how often should I water my ficus plant?”

“Hey there, does my spider plant need a lot of sun?”

“Hey there, my cat peed on my verbena plant, what should I do?”
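The polling loop described earlier can be sketched as follows. This is a minimal sketch, not the module's official example: it assumes the speech service client exposes async `get_commands(n)` (returning a list of command strings heard after the trigger) and `say(text, blocking)` methods, and that the chat client exposes an async `chat(prompt)`. Verify these against the speechio and local LLM module READMEs. `assistant_loop`, `poll_s`, and `max_turns` are hypothetical names.

```python
import asyncio
from typing import Any, Optional


async def assistant_loop(speech: Any, chat: Any, poll_s: float = 0.5,
                         max_turns: Optional[int] = None) -> int:
    """Poll the speech buffer, send each command to the LLM, and speak the reply.

    Runs forever when max_turns is None; returns the number of answered commands.
    """
    answered = 0
    while max_turns is None or answered < max_turns:
        # Pull at most one buffered command from the speech module
        commands = await speech.get_commands(1)
        if not commands:
            await asyncio.sleep(poll_s)  # nothing heard yet; wait before polling again
            continue
        reply = await chat.chat(commands[0])
        # blocking=True so the assistant finishes speaking before the next poll
        await speech.say(reply, True)
        answered += 1
    return answered
```

Polling with a short sleep keeps the loop simple and avoids spinning the CPU while waiting, which matters on a small board that is also running the LLM.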
