I attended the 95th New York Hardware Meetup on Smart Cities and IoT at Viam headquarters, and during the mingling period I met a very interesting and fun attendee named Abigail. She told me she was a confectionery roboticist who makes bizarre cakes. Listen, two things make me very excited: desserts and robots. So I was already hooked. She showed me her website and our love story began.

Knowing that Viam was hosting a team event soon, I proposed collaborating with Abigail on an interactive smart cake for the occasion. We arranged a meeting to discuss the project's scope, materials, and potential features. During our conversation, we got onto a running joke at Viam: in principle, everything is a camera. Viam defines cameras unusually broadly. An ultrasonic sensor can be a camera, a lidar can be a camera, a bump sensor can be a camera. This led us to ponder: why not consider a cake as an edible camera component?

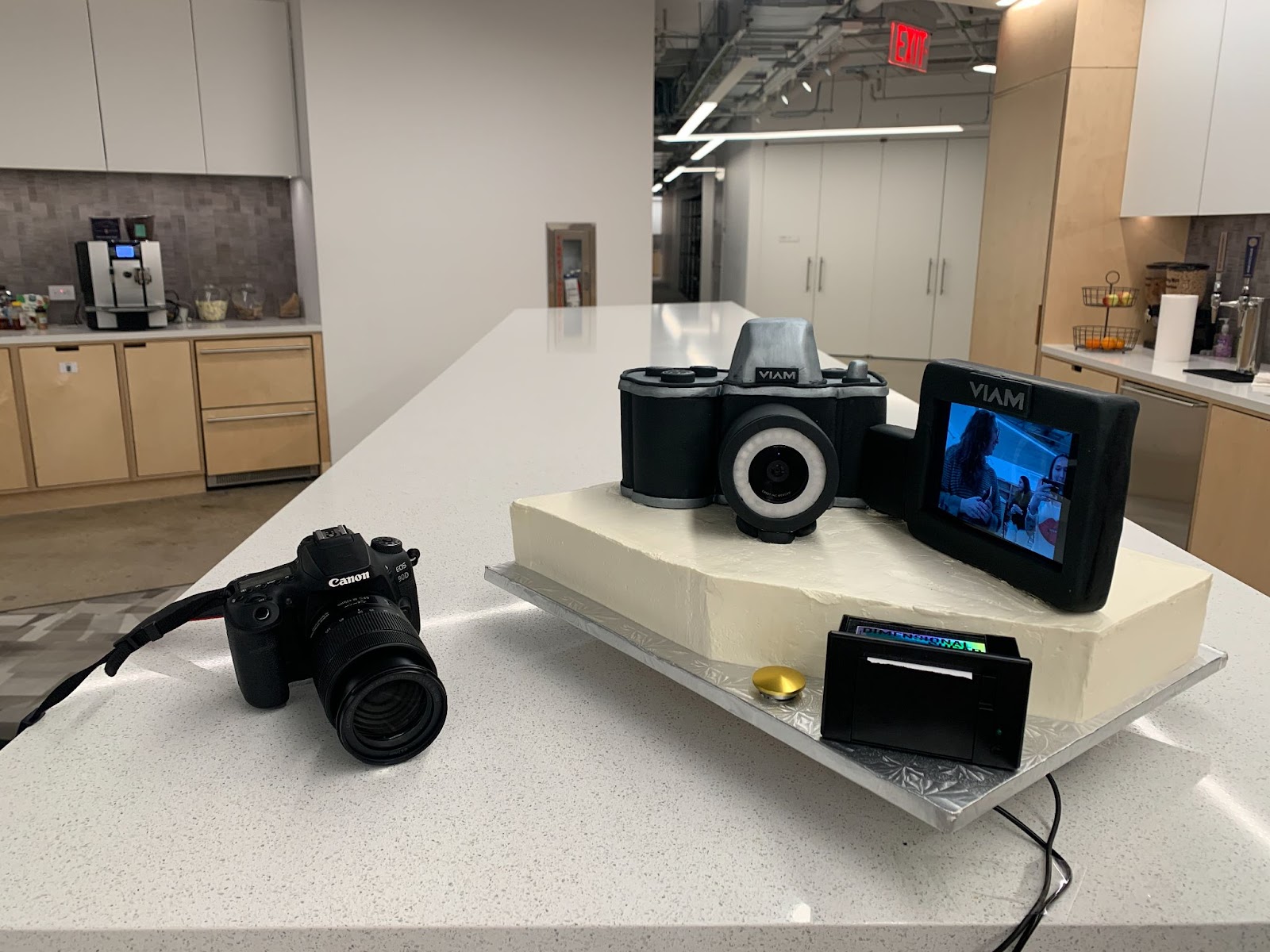

This is the cake we made, which is also a camera, posing with a camera, photo taken with yet another camera:

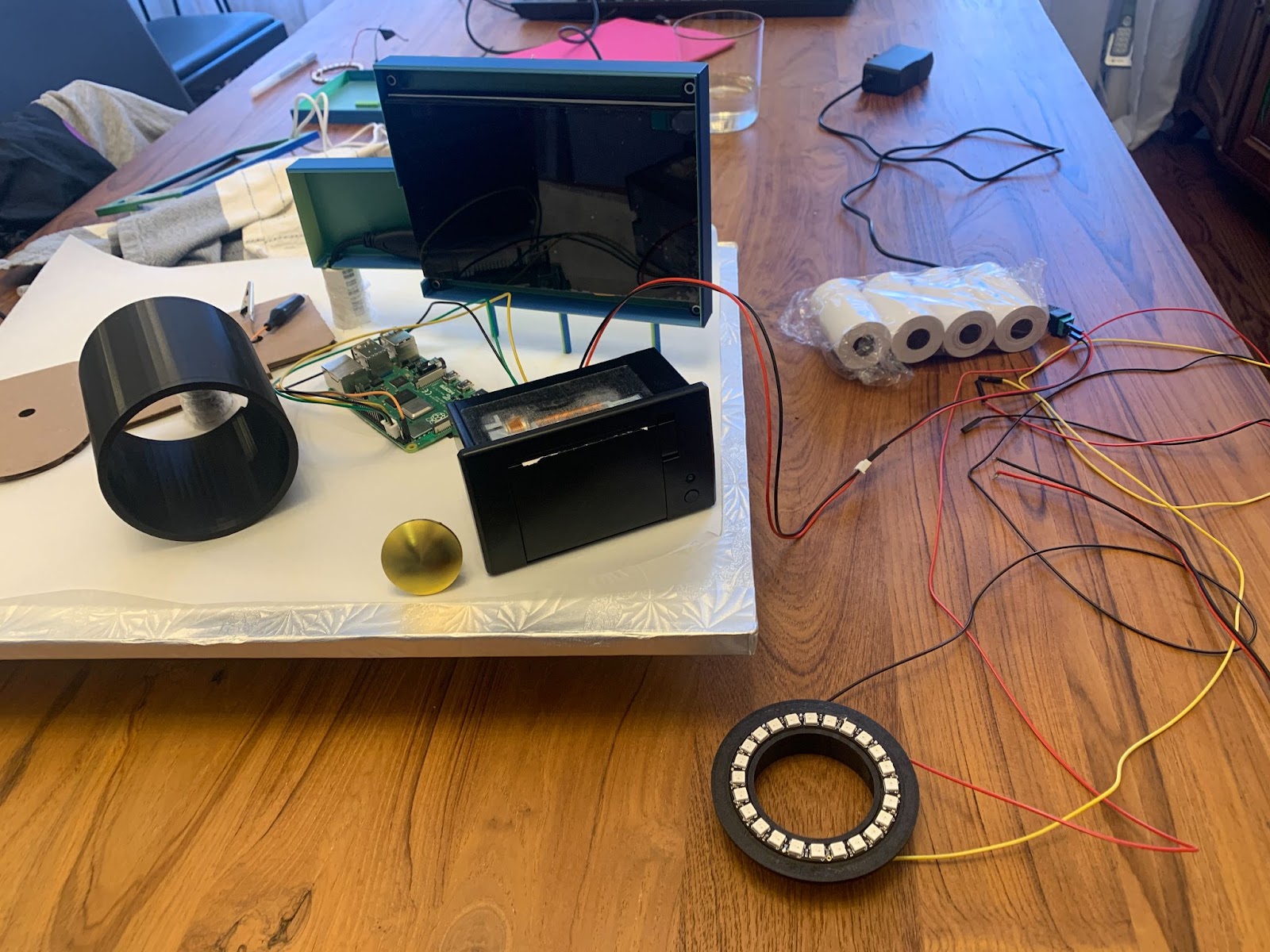

Our brainstorming session resulted in plans to incorporate LEDs, cameras for people detection, and other interactive components such as thermal printers and displays into the cake. We divided our roles, with me handling the technological aspects and Abigail taking charge of the cake-making process. With our roles defined, we dived into the prototyping phase. Here you can see our initial sketches.

Imagine a cake that not only looks like a camera but houses a real camera inside, equipped with machine learning capabilities. When the smart cake detects people, a vibrant green NeoPixel ring lights up around the camera, signaling its awareness. If you are in its frame and decide to capture the moment, you press a button and a white NeoPixel light performs a countdown before snapping the perfect shot. And the experience doesn't end there: after the photo, the cake prints a personalized receipt with the date, event name, and photo number, so that you can get your photo after the event and cherish the memories later on.
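Under the hood, this interaction boils down to a small state machine. Here is a minimal TypeScript sketch of it, assuming hypothetical event names (personDetected, buttonPressed, and so on) that your own loop would produce from the detection camera and the arcade button. It isn't the code from my repository and it doesn't call any Viam API; it only decides what should happen next.

```typescript
// A minimal sketch of the cake's interaction flow as a state machine.
// All state, event, and action names are hypothetical, not Viam APIs.
type CakeState = "idle" | "watching" | "countingDown" | "printing";
type CakeEvent =
  | "personDetected"
  | "personLeft"
  | "buttonPressed"
  | "countdownFinished"
  | "receiptPrinted";
type CakeAction =
  | "greenRingOn"
  | "ringOff"
  | "startWhiteCountdown"
  | "takePhotoAndPrintReceipt";

interface Transition {
  next: CakeState;
  action?: CakeAction; // side effect for the caller to perform, if any
}

function step(state: CakeState, event: CakeEvent): Transition {
  switch (state) {
    case "idle":
      // Only a detected person wakes the cake up; the button is ignored.
      return event === "personDetected"
        ? { next: "watching", action: "greenRingOn" }
        : { next: "idle" };
    case "watching":
      if (event === "personLeft") return { next: "idle", action: "ringOff" };
      if (event === "buttonPressed") {
        return { next: "countingDown", action: "startWhiteCountdown" };
      }
      return { next: "watching" };
    case "countingDown":
      // Nothing interrupts the countdown once it has started.
      return event === "countdownFinished"
        ? { next: "printing", action: "takePhotoAndPrintReceipt" }
        : { next: "countingDown" };
    case "printing":
      // Once the receipt is out, go back to the green "I see you" ring.
      return event === "receiptPrinted"
        ? { next: "watching", action: "greenRingOn" }
        : { next: "printing" };
  }
}
```

Keeping the transitions in one pure function means the hardware loop only has to translate detections and button presses into events and carry out the returned action.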

Here you can see some photos my interactive camera took of my coworkers and me:

And here is how the receipt looks:

Requirements to build a cake like this yourself

If you want to build your own interactive cake, you need the following hardware, software, and modules.

Hardware

- Raspberry Pi, with microSD card, set up following the Raspberry Pi Setup Guide

- Raspberry Pi display

- 5V power supply

- 24-pixel RGB NeoPixel LED ring

- Adafruit thermal printer

- Thermal paper receipt roll 2-1/4"

- USB webcam

- Arcade button

You also need some cake supplies like cake paint, cake mix, food coloring, fondant, etc.

Software

Modules

I used the following modules from the Viam Registry to interact with the NeoPixel and camera components.

These have their own software requirements, but they are installed automatically when you add the modules to your machine.

You do have to follow each module's README to configure it correctly on your machine, since the configuration differs from module to module.

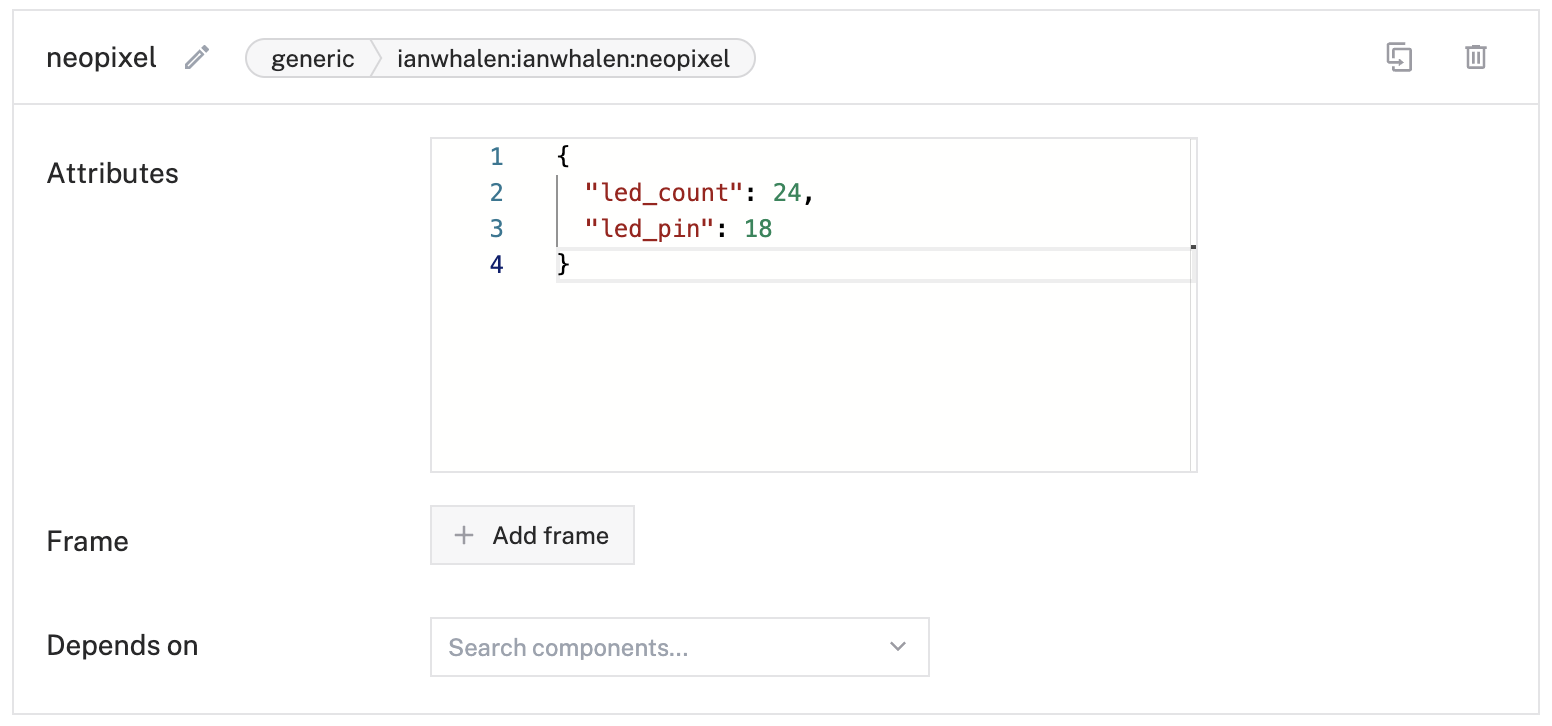

For the NeoPixel module, my configuration looks like this in the Viam app:

And my face-identification module configuration looks like this:

Steps to build your own cake

Wire your electronics

Wire together your Raspberry Pi, NeoPixel, thermal printer, USB camera and power supply according to their wiring diagrams.

Wire the NeoPixel ring to the Pi

Make sure you wire the NeoPixel ring according to the guide below:

- Pi 5V to LED 5V

- Pi GND to LED GND

- Pi GPIO18 to LED Din

On the Raspberry Pi, NeoPixels only work when their data line is connected to GPIO10, GPIO12, GPIO18, or GPIO21, so it's important to follow this step.
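If you want to catch a wrong data pin before you start soldering, the wiring list above can be written down as data and checked against the four pins that work. This is only a sketch with hypothetical names (NEOPIXEL_DATA_GPIOS, neopixelWiring); it uses the GPIO (BCM) numbers listed above, not physical pin numbers.

```typescript
// GPIO (BCM) numbers that can drive the NeoPixel data line on a Raspberry Pi.
const NEOPIXEL_DATA_GPIOS: ReadonlyArray<number> = [10, 12, 18, 21];

interface Wire {
  from: string; // Raspberry Pi side
  to: string; // NeoPixel ring side
}

// Returns the three connections for the ring, or throws if the chosen
// data pin is not one of the supported GPIOs.
function neopixelWiring(dataGpio: number): Wire[] {
  if (NEOPIXEL_DATA_GPIOS.indexOf(dataGpio) === -1) {
    const allowed = NEOPIXEL_DATA_GPIOS.map((g) => "GPIO" + g).join(", ");
    throw new Error(`GPIO${dataGpio} can't drive NeoPixels; use one of ${allowed}`);
  }
  return [
    { from: "Pi 5V", to: "LED 5V" },
    { from: "Pi GND", to: "LED GND" },
    { from: `Pi GPIO${dataGpio}`, to: "LED Din" },
  ];
}
```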

Wire the thermal printer to the Pi

I wired my thermal printer according to the guide below but yours could look different depending on the printer you got:

- Pi GND to Printer GND

- Pi pin 8, GPIO14 (UART TX), to the printer's RX (data into the printer)

- Pi pin 10, GPIO15 (UART RX), to the printer's TX (data out of the printer)

As you can see, the printer's TX and RX go to Pi pins with the opposite function: TX to RX and RX to TX. This is known as a crossover configuration. You can read more about the thermal receipt printer connections here.

Also, all Adafruit thermal printer varieties are bare units; they don't have a DC barrel jack for power. Use a female DC power adapter to connect the printer to a 5V 2A power supply.
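As for what actually gets printed: the receipt holds the date, the event name, and the photo number. Here is a minimal sketch of laying that text out for narrow thermal paper. The 32-character line width is an assumption (check your printer's datasheet), the function names are made up for this example, and sending the text over the UART connection above is left to whichever printer library you use.

```typescript
// Assumption: 32 characters per line, typical for the normal font on
// 58 mm thermal printers. Change LINE_WIDTH if your printer differs.
const LINE_WIDTH = 32;

function spaces(n: number): string {
  let s = "";
  for (let i = 0; i < n; i++) s += " ";
  return s;
}

// Center one line of text, truncating it if it is wider than the paper.
function center(text: string, width: number = LINE_WIDTH): string {
  if (text.length >= width) return text.slice(0, width);
  return spaces(Math.floor((width - text.length) / 2)) + text;
}

function pad2(n: number): string {
  return n < 10 ? "0" + n : String(n);
}

// Build the receipt text: event name, date (YYYY-MM-DD), and photo number,
// one centered line each, ending with a newline.
function formatReceipt(eventName: string, photoNumber: number, when: Date): string {
  const date = `${when.getFullYear()}-${pad2(when.getMonth() + 1)}-${pad2(when.getDate())}`;
  return [center(eventName), center(date), center(`Photo #${photoNumber}`)].join("\n") + "\n";
}
```

The photo number on the receipt is what lets people find their shot after the event, so it's worth keeping it on its own line.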

Wire the webcam to the Pi

The webcam is directly connected to the Raspberry Pi via USB.

Test your components

Test each component individually to confirm it works, and debug anything that doesn't at this step. You can test them in the Viam app, so let's configure them there.

Configure your components, services and modules

In the Viam app, create a new machine and give it a name like cake. Follow the instructions on the Setup tab to install viam-server on your Raspberry Pi and connect to your machine. Then navigate to the Config tab of your machine's page in the Viam app and configure your board, camera, detection camera, and NeoPixel generic component. Next, configure your vision service as face-identification. Under the Modules tab, deploy the face-identification and NeoPixel modules from the registry. Follow each module's README to make sure it is configured correctly and fully.

Now navigate to the Control tab of your machine’s page and test your machine!

The NeoPixel light, facial detection, and printing capabilities are described in more detail in their own sections below.

NeoPixel ring interaction

I read this guide to learn about NeoPixel rings. It has information about different NeoPixel shapes, basic connections, best practices, and software.

Some steps that I followed to achieve a successful interaction are explained below:

I ran this command on a terminal window when I was SSH'd into my Pi:

This is because on-board sound must be disabled to use GPIO18. You can do this in /boot/config.txt by changing "dtparam=audio=on" to "dtparam=audio=off" and rebooting. If you skip this, you may get a segmentation fault.
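In other words, the fix is a one-line change in /boot/config.txt. Here is a small sketch of that edit as a pure function over the file's text; the name disableAudio is hypothetical, and reading and writing the real file (which needs sudo) and rebooting are left out. It only rewrites an exact dtparam=audio=on line, and appends dtparam=audio=off if the setting is missing entirely.

```typescript
// Return a copy of config.txt contents with on-board audio disabled,
// which frees GPIO18 for the NeoPixel data line.
function disableAudio(configText: string): string {
  const lines = configText.split("\n");
  let found = false;
  const updated = lines.map((line) => {
    const trimmed = line.trim();
    if (trimmed === "dtparam=audio=on") {
      found = true;
      return "dtparam=audio=off";
    }
    if (trimmed === "dtparam=audio=off") found = true;
    return line;
  });
  if (!found) {
    // No audio setting at all: add one, keeping a trailing newline if present.
    if (updated[updated.length - 1] === "") {
      updated.splice(updated.length - 1, 0, "dtparam=audio=off");
    } else {
      updated.push("dtparam=audio=off");
    }
  }
  return updated.join("\n");
}
```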

After checking that everything was wired correctly and lighting up, I started coding. The module documentation had example code that lights 24 pixels in green, one by one. I tweaked it so that different ranges of pixels light up in different colors for different events.
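Lighting different colors in different ranges is easy to express as data. Below is a sketch that turns a list of pixel ranges into one color per pixel for the 24-pixel ring. The type and function names are hypothetical, and pushing the resulting frame to the ring goes through whatever calls the NeoPixel module's README documents, which aren't reproduced here.

```typescript
type RGB = [number, number, number];

interface ColorRange {
  start: number; // first pixel index, inclusive
  end: number; // last pixel index, exclusive
  color: RGB;
}

// Build one color per pixel for a ring of `count` pixels. Pixels outside
// every range stay off; later ranges overwrite earlier ones on overlap,
// and ranges that run past the ring are clipped.
function buildFrame(count: number, ranges: ColorRange[]): RGB[] {
  const frame: RGB[] = [];
  for (let i = 0; i < count; i++) frame.push([0, 0, 0]);
  for (const r of ranges) {
    for (let i = Math.max(0, r.start); i < Math.min(count, r.end); i++) {
      frame[i] = r.color;
    }
  }
  return frame;
}
```

For example, buildFrame(24, [{ start: 0, end: 24, color: [0, 255, 0] }]) describes the all-green ring that shows the cake has seen someone.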

For the countdown, this is what my code looks like:

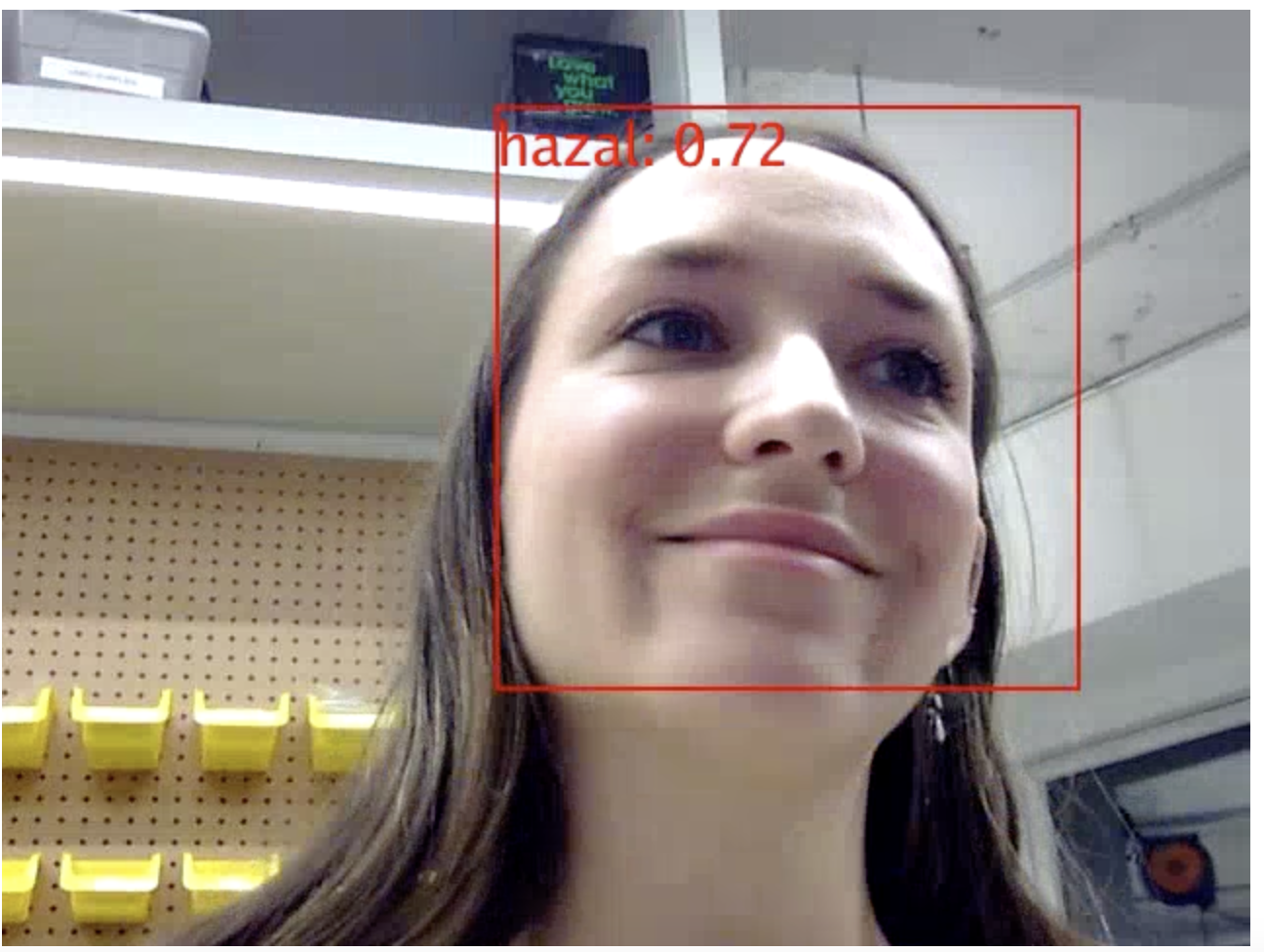
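The countdown's timing can also be sketched without any hardware. This version assumes the white ring empties evenly over a chosen number of seconds and that you pass in your own functions to light the ring, wait, and take the photo. countdownSteps and runCountdown are hypothetical names, not the code in the screenshot above.

```typescript
// How many white pixels to light at the start of each second so the ring
// empties evenly and reaches zero when the photo is taken.
function countdownSteps(pixelCount: number, seconds: number): number[] {
  const steps: number[] = [];
  for (let s = 0; s < seconds; s++) {
    steps.push(Math.round((pixelCount * (seconds - s)) / seconds));
  }
  return steps;
}

// Drive the countdown through injected functions so the timing logic stays
// independent of the LED library and the camera.
async function runCountdown(
  pixelCount: number,
  seconds: number,
  showWhite: (lit: number) => Promise<void>,
  wait: (ms: number) => Promise<void>,
  takePhoto: () => Promise<void>,
): Promise<void> {
  for (const lit of countdownSteps(pixelCount, seconds)) {
    await showWhite(lit);
    await wait(1000);
  }
  await showWhite(0); // ring fully off: the shot happens now
  await takePhoto();
}
```

Injecting wait also makes the countdown testable without actually sleeping.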

Facial detection capability

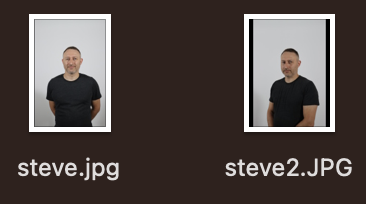

Using object detection, I configured the face-identification module on my machine. I wanted to trigger specific actions for different people, so I added photos of each person I wanted to detect to a folder called known_faces on the Pi and specified them in my config attributes. For example, I added a few photos of Steve to a folder and pointed to it in the config, so that when the detection recognized him, it would report Steve.

I did the same for my photos so I could test the interaction. I started getting detections when I tested my detection camera in the Control tab.

For any person the model doesn't recognize, it reports "unknown", and you can still trigger an event based on that. So the logic is: if it's Steve, do this; if it's Hazal, do that; if it's anyone else, do something else.
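That logic maps cleanly onto a switch over the detected label. Here's a sketch under a few assumptions: each detection carries a label (className) and a confidence score, the labels match the names you gave each person in the module config, and the reaction names are placeholders for whatever you want the cake to do. The 0.5 confidence threshold is just a starting guess.

```typescript
// Minimal shape of a detection for this sketch. The field names are an
// assumption; check the detections your SDK actually returns.
interface Detection {
  className: string; // person's label from the config, or "unknown"
  confidence: number; // 0..1
}

// Hypothetical reactions; on the cake these would map to lights, etc.
type Reaction = "greetSteve" | "greetHazal" | "greetGuest" | "none";

function chooseReaction(detections: Detection[], minConfidence: number = 0.5): Reaction {
  // Use the most confident detection that clears the threshold.
  let best: Detection | undefined;
  for (const d of detections) {
    if (d.confidence >= minConfidence && (best === undefined || d.confidence > best.confidence)) {
      best = d;
    }
  }
  if (best === undefined) return "none";
  switch (best.className) {
    case "Steve":
      return "greetSteve";
    case "Hazal":
      return "greetHazal";
    default:
      return "greetGuest"; // "unknown" and anyone without their own reaction
  }
}
```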

Display interaction

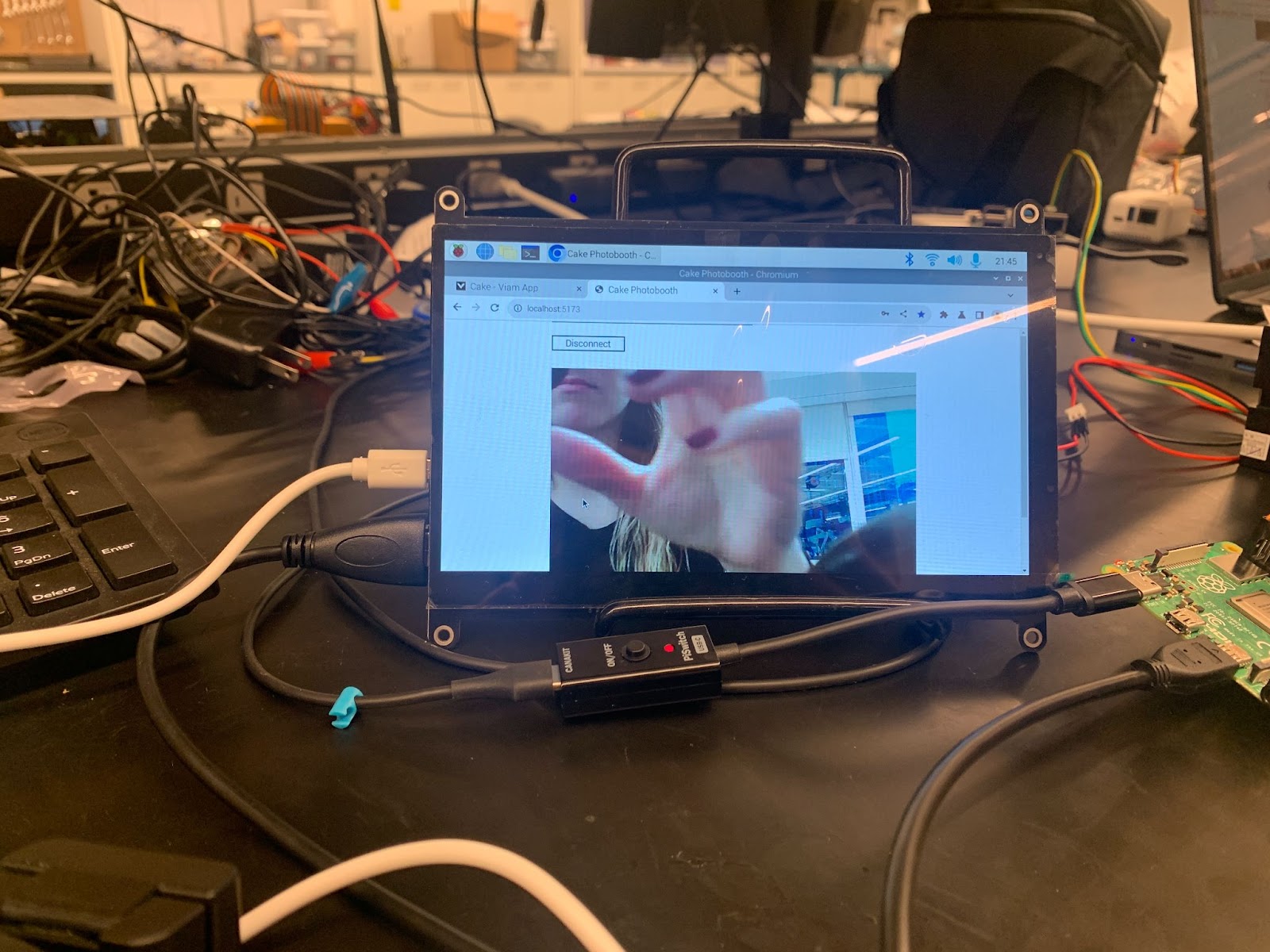

I wanted to show my detections on a display so people could use the cake as a photobooth and see themselves while posing. I thought I could do this using Viam's TypeScript SDK.

First, I had to make sure the display's language was set to my language of choice, which is English. I went into the display's own settings: when the display is powered on, it shows a blank screen and a menu. From there, go down to the icon that looks like a Windows logo, go back to the menu, use up or down to change the language, and press menu again. There are no instructions online, so trust me on that.

For some reason, my display said "No signal", and I used this thread to fix it. Apparently, the Pi sometimes outputs a weak HDMI signal, and a few settings changes are necessary. The thread advises against it, but in the end I did set hdmi_safe=1, since that overrides many of the previous options.

After fighting with the display for a while, I also installed the full version of Raspberry Pi OS (not the Lite version from the install instructions). With the full version of Pi OS, the /boot/config.txt changes are not required.

When I opened my machine in the Viam app on the display, I could see my camera stream, which was a success.

But I wanted to make this stream full screen, so I started the TypeScript portion of the project.

TypeScript app full-screen interaction

While SSH'd into the Pi, I ran the following commands to install an example app made by Viam (my plan was to take the parts I needed from the example and tweak the rest, which worked out well):

In the cd’ed directory:

Here you have to set the environment variables. You can find your API keys under Fleet in the Viam app, and your host is <yourmachinename>.<uniquestring>.viam.cloud. Mine, for example, is cake-main.35s324h8pp.viam.cloud. Then I saved the variables, exited, and ran the following command:

I updated the camera name in the example to match my config. The example streams from a camera named cam:

```typescript
const stream = useStream(streamClient, 'cam');
```

My camera is named camera, so I changed 'cam' to 'camera'. If you use detectionCam instead, the stream shows the detections.

The example had a lot of motion-related code for driving a rover, so I took those parts out and tailored the example to my project's needs.
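Rather than pasting the host and API keys into the source, the app can read them from the environment variables set earlier and fail early if something is missing. Here is a sketch, assuming hypothetical variable names VIAM_HOST, VIAM_API_KEY_ID, and VIAM_API_KEY, and a deliberately loose host check based on the <machinename>.<uniquestring>.viam.cloud format. Passing the result to the SDK's connect call is not shown; follow the example app for that part.

```typescript
// Connection settings the app needs. The variable names below are
// hypothetical; use whatever names your copy of the example reads.
interface MachineConnection {
  host: string;
  apiKeyId: string;
  apiKey: string;
}

// Loose check for <machinename>.<uniquestring>.viam.cloud.
const HOST_PATTERN = /^[a-z0-9-]+\.[a-z0-9-]+\.viam\.cloud$/i;

function loadConnection(env: Record<string, string | undefined>): MachineConnection {
  const host = env["VIAM_HOST"];
  const apiKeyId = env["VIAM_API_KEY_ID"];
  const apiKey = env["VIAM_API_KEY"];
  if (!host || !apiKeyId || !apiKey) {
    throw new Error("Set VIAM_HOST, VIAM_API_KEY_ID and VIAM_API_KEY before starting the app");
  }
  if (!HOST_PATTERN.test(host)) {
    throw new Error(`"${host}" doesn't look like <machinename>.<uniquestring>.viam.cloud`);
  }
  return { host, apiKeyId, apiKey };
}
```

In a Node-based app you would call loadConnection(process.env) once at startup, so a typo in the host shows up immediately instead of as a failed connection later.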

Design your interactive cake, looks matter!

With our electronics wired and tested, we prototyped how the cake would look in Tinkercad, using the exact dimensions of the components.

After the design process, we had a baseline for how the cake would look. We wanted to enclose most of the electronics under the cake and 3D print covers for the ones embedded in it, so this step was not just helpful but necessary.

Full code

You can see the full code in the repository. You should replace your machine’s API key and API key ID if you want to run the same code for your own smart machine.

Assembling the cake

With the technical parts done, we started assembling the cake itself. I also held some taste-test sessions, which were super yummy.

These are the photos from the assembly process:

Final result

You can see it in action here (and half eaten):

Honestly, I had the best time in the world building this with Abigail. Let me know if you attempt to make one; I can't wait to see all the photos taken with your new smart machine. If this is not your cup of cake, you can head over to our blog "DIY home automation projects" and try one of the other Viam tutorials to keep building robots. If you have questions, or want to meet other people working on robots, you should join our Community Discord!
