What have you built or tinkered with in April? As we head into May, we’d love to hear what our community has been working on, so share it with us on Discord!

As for us, April was a whirlwind of activity. From taking part in this year’s XchangeIdeas and Robot Block Party events to sharing an inside look at our Fleet Management engineering team, we’ve been connecting with robotics-minded folks from all over.

Let’s dive into what’s new on Viam in April.

New features

1. Model Service: Use any TFLite model with Viam

You can now use any TFLite model you want with Viam! To make using ML models with Viam more flexible, we now let you run machine learning inference through Viam without going through our Vision Service. This means you can bring your own models for object detection, image classification, and more to your robot.

Take a look at how you can use a TFLite model in Viam here:

Please note that you must now use the robot config interface in Viam to add and remove models (you will no longer be able to add or remove models using the SDKs). Learn more about this change in our release notes.

You can also learn more about Viam's built-in machine learning capabilities in our documentation.

2. Vision Service: Now more modular & customizable

Have a specific machine learning approach in mind for vision-related purposes? You can now build your own custom vision service using our APIs.

While Viam’s Vision Service provides built-in algorithms for object detection, image classification, and segmentation, the ability to define your own custom vision service is useful if you want to use a different model or customize the algorithms based on your robots’ image data.

Please note that this update comes with some breaking changes. You will now need to:

- Create individual vision service instances for each detector, classifier, and segmenter model.

- Add and remove models as new vision service instances using the robot config.

- Add machine learning vision models by first adding a model to the ML Model Service and then adding a vision service instance that uses the ML model.

If you are using the Vision Service, please see instructions for updating your configurations and code in our release notes.
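To make the new ordering concrete (ML model service first, then a vision service that uses it), here is a minimal Python sketch of a robot config expressed as a dict. The service names and the attribute keys ("tflite_cpu", "model_path", "label_path", "mlmodel_name") are assumptions for illustration only; the release notes have the authoritative schema. The helper flags any vision service that points at an ML model service you have not configured.

```python
import json

# Hypothetical names and keys for illustration; check the release notes
# for the real config schema.
def build_vision_config(model_path: str, label_path: str) -> dict:
    """Pair one ML model service with one vision service that uses it."""
    ml_model = {
        "name": "my-tflite-model",  # hypothetical service name
        "type": "mlmodel",
        "model": "tflite_cpu",      # assumed model name for TFLite inference
        "attributes": {"model_path": model_path, "label_path": label_path},
    }
    detector = {
        "name": "my-detector",      # one vision service instance per detector
        "type": "vision",
        "model": "mlmodel",
        # assumed key that points the vision service at the ML model service
        "attributes": {"mlmodel_name": ml_model["name"]},
    }
    return {"services": [ml_model, detector]}


def missing_ml_models(config: dict) -> list:
    """Return names of vision services whose ML model service is not configured."""
    services = config.get("services", [])
    ml_names = {s["name"] for s in services if s.get("type") == "mlmodel"}
    return [
        s["name"]
        for s in services
        if s.get("type") == "vision"
        and s.get("model") == "mlmodel"
        and s.get("attributes", {}).get("mlmodel_name") not in ml_names
    ]


if __name__ == "__main__":
    config = build_vision_config("/path/to/model.tflite", "/path/to/labels.txt")
    print(json.dumps(config, indent=2))
    print(missing_ml_models(config))  # an empty list means every reference resolves
```

Keeping one vision service per model mirrors the breaking change above: each detector, classifier, or segmenter becomes its own instance that names the ML model it depends on.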

3. Components: New encoder component and API

Encoders now have their own component and API in Viam! Used to detect the position or rotation of a motor or joint, encoders are useful for robots that need to operate with high speed or accuracy.

Previously, when you configured an encoder with Viam, you could see encoder counts by checking the ‘Position’ field of the encoded motor component.

Now, encoder components have their own cards in the Config tab that you can use to directly check and reset the encoder values of your motors. You can also manipulate encoders using our SDKs.

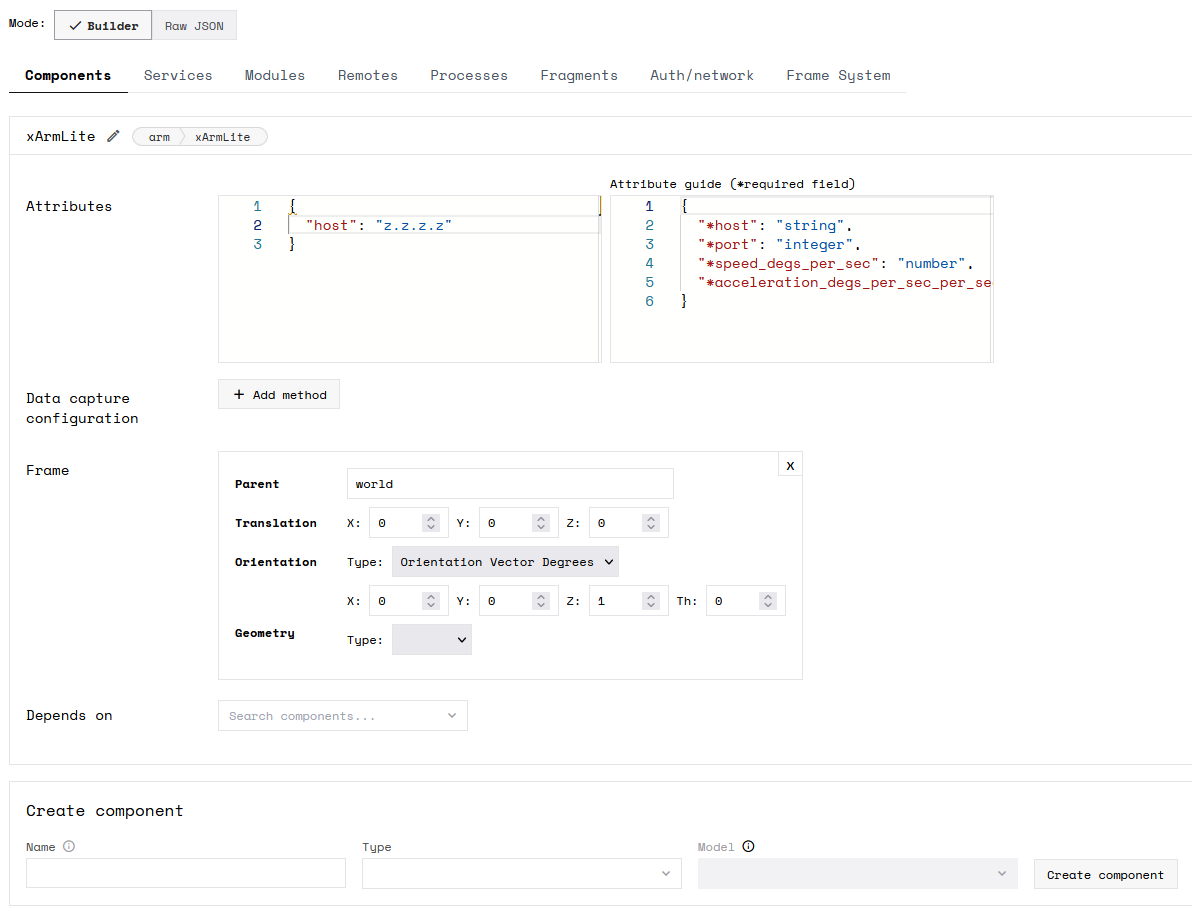
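Conceptually, an incremental encoder keeps a running tick count that you can read or zero, which is what the new encoder cards let you check and reset. The Python sketch below models that idea with a hypothetical TickEncoder class; the class, its method names, and the ticks_per_rotation parameter are illustrative assumptions, not the SDK's encoder API, so consult the SDK documentation for the real calls.

```python
from dataclasses import dataclass


@dataclass
class TickEncoder:
    """Hypothetical incremental encoder: counts ticks and converts them to
    rotations. Illustrative only; not Viam's Encoder API."""
    ticks_per_rotation: int
    ticks: int = 0

    def on_tick(self, direction: int) -> None:
        # +1 for a forward tick, -1 for a reverse tick
        if direction not in (1, -1):
            raise ValueError("direction must be +1 or -1")
        self.ticks += direction

    def position_ticks(self) -> int:
        return self.ticks

    def position_rotations(self) -> float:
        return self.ticks / self.ticks_per_rotation

    def reset_position(self) -> None:
        # Zero the count, like the reset action on the encoder card
        self.ticks = 0


if __name__ == "__main__":
    enc = TickEncoder(ticks_per_rotation=4)
    for _ in range(6):
        enc.on_tick(+1)
    enc.on_tick(-1)
    print(enc.position_ticks(), enc.position_rotations())  # 5 1.25
    enc.reset_position()
    print(enc.position_ticks())  # 0
```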

4. Components: New xArmLite component

If you recently got your hands on Ufactory’s new and more affordable robotic arm, the Ufactory Lite 6, we have good news! You can now configure the Ufactory Lite 6 arm in minutes as a component in Viam. Just select “xArmLite” as the model for the “arm” type component when you’re configuring it on the Config tab in Viam.

Learn more here about the other arm models you can configure with Viam, such as the xArm6 and xArm7.

5. Our Python SDK now supports modular resources

Our Python SDK now supports creating custom components or services for your robot as modular resources, which can then be used with our RDK. Learn more about extending Viam with custom resources here.

Improvements

1. Motion Service: Use the Motion interface to pass in obstacles and transforms

Motion planning is pretty useful, especially when you can do fun things like this:

The update: to make controlling your robot a more consistent and predictable experience, we have removed the ability to define a WorldState parameter while using the MoveToPosition method on an arm component.

We made this change because the MoveToPosition method does not consider a robot’s entire environment when planning motion for an arm component, which could make it confusing to place obstacles correctly.

Only the Motion Service considers the complete robot environment, as well as the full structure of the robot itself. Obstacles can now be defined only through specific arguments to Motion Service methods, which keeps the experience consistent however you approach motion planning.

2. Vision Service: No longer need to restart viam-server when plugging in a new webcam

Now when you add a new webcam to your robot, you no longer need to restart the viam-server to work with the new camera component. The viam-server now continuously checks for new camera connections, making it easier to add or change cameras.
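We haven’t described how viam-server detects new cameras internally, so the following is only a generic polling sketch in Python, not viam-server’s implementation: take periodic snapshots of the available device paths and report what was added or removed between snapshots. The function names and the polling interval are hypothetical.

```python
import time
from typing import Callable, Iterable, Iterator, Set, Tuple


def diff_devices(previous: Set[str], current: Set[str]) -> Tuple[Set[str], Set[str]]:
    """Return (added, removed) device paths between two snapshots."""
    return current - previous, previous - current


def watch_cameras(
    poll: Callable[[], Iterable[str]], rounds: int, interval_s: float = 1.0
) -> Iterator[Tuple[Set[str], Set[str]]]:
    """Poll for camera devices and yield (added, removed) whenever they change."""
    seen = set(poll())
    for _ in range(rounds):
        time.sleep(interval_s)
        current = set(poll())
        added, removed = diff_devices(seen, current)
        if added or removed:
            yield added, removed
        seen = current
```

On Linux, where webcams typically appear as /dev/video* device nodes, you could pass something like lambda: glob.glob("/dev/video*") as the poll function. Passing the poll function in, rather than hard-coding it, keeps the change-detection logic easy to test with fake snapshots.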

3. Change out modules on the fly

Now you can update or swap out modules for the viam-server by updating the “executable_path” in the module config, without needing to restart the server.
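If you keep a local JSON copy of your robot config, the swap amounts to editing one field. Below is a minimal Python sketch; only “executable_path” comes from this update, while the top-level “modules” list and the “name” key are assumptions about the config layout. Whether viam-server picks up an edit to a local file depends on how your server loads its config, and you may instead make this change on the Config tab in Viam.

```python
import json
from pathlib import Path


def swap_module_executable(config_path: Path, module_name: str, new_executable: str) -> str:
    """Point a named module at a new executable in a JSON robot config.

    Assumes a top-level "modules" list whose entries have "name" and
    "executable_path" keys. Returns the previous executable path.
    """
    config = json.loads(config_path.read_text())
    for module in config.get("modules", []):
        if module.get("name") == module_name:
            old_path = module["executable_path"]
            module["executable_path"] = new_executable
            config_path.write_text(json.dumps(config, indent=2))
            return old_path
    raise KeyError(f"no module named {module_name!r} in {config_path}")
```

For example, swap_module_executable(Path("robot-config.json"), "my-module", "/opt/my-module/v2/run.sh") would point a hypothetical module named my-module at a new build and return the old path, so you can roll back by calling it again with that value.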

Take a look at how to do that here:

Bug fixes

Here are some of the bugs we squashed in April:

- Motion: Fixed an issue where arms whose joints were outside their control limits would break remote control in the Viam app

- Motion: Fixed an issue where arm control was not properly commanding arms to move small distances

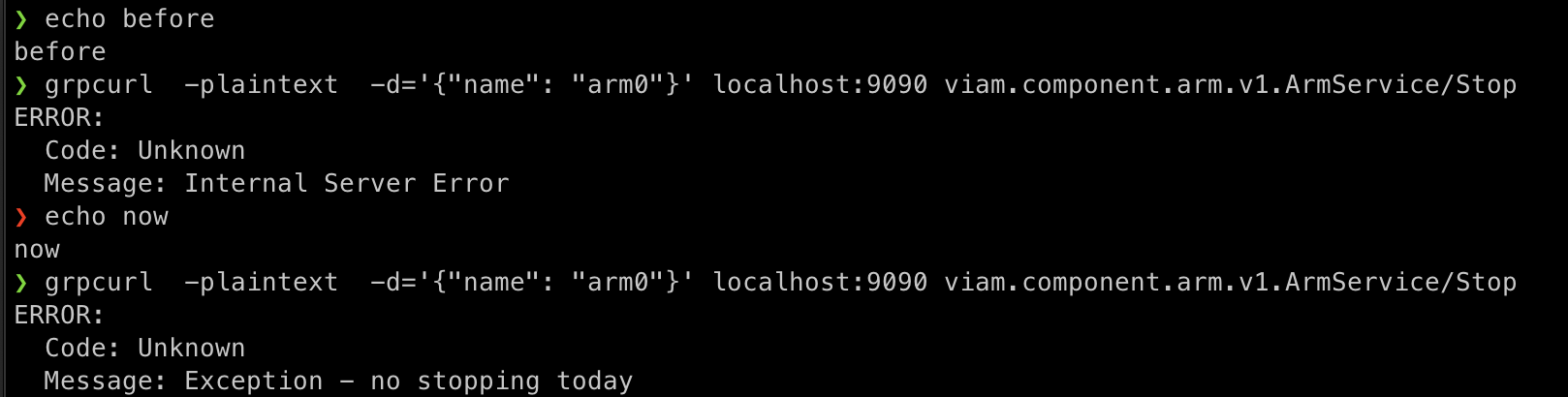

- Python SDK: Servers and modular resources built with the Python SDK now return more useful error messages

Check out last month's product roundup here, or see our release notes to learn more.

And that’s it for April! If you have any feedback or questions, our team would love to hear from you in our growing Discord community. There, you can also chat with fellow robotics enthusiasts for some inspiration.

May this month (pun intended) bring you fun adventures in robotics!